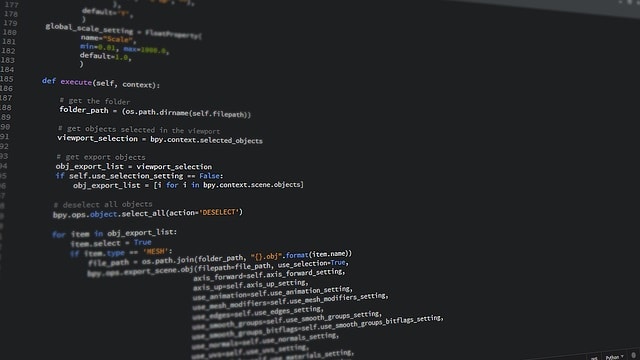

How Is Python Different from Other Programming Languages?

Among the many programming languages available today, Python is one that is in especially high demand. Much of that demand comes from the ways it differs from other languages on the market. One such difference, or rather benefit, is that Python code is easy to read and the language is relatively simple to learn. Choosing a programming language typically depends on many factors, such as training, availability, cost, emotional attachment, and prior investment. These factors vary from one situation to another, however, so the choice of the right language also depends on other considerations. Languages on the market today include Java, PHP, C++, Perl, Ruby, JavaScript, Tcl, Smalltalk, and many others, and Python differs from each of them in a number of ways.