Facebook Gets Pessimistic About AI

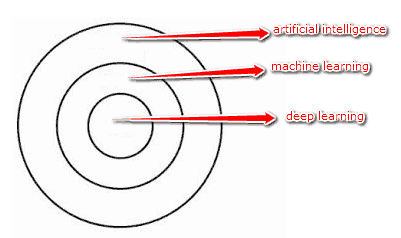

Artificial intelligence is a field that is developing by the day, all over the world. Some of the planet’s greatest scientific minds are working on it, and we’re told that AI-led initiatives will be the next ‘big thing’ in almost everything, from personal assistants to self-driving cars and video games. So why does Facebook’s Head of Artificial Intelligence think that his specialist field is about to ‘hit a wall’?

The opinion, which comes from the highly respected Jerome Pesenti, seems strangely timed. Only last week, we learned that artificial intelligence is set to take on a new role in the British casino scene, where it will be used to assist players of UK online slots. The software will monitor the spending habits of online slots players and pick up on unusual betting patterns. If the AI concludes that a player is showing signs of impulsive or erratic betting, the slots will be locked for thirty seconds to give them the chance to reconsider their bet. Gambling wasn’t an area where the potential benefits of AI had previously been considered, and so the news was taken as…