Linear regression with multiple features

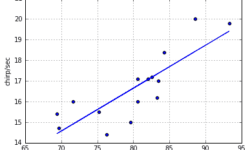

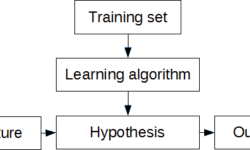

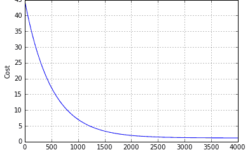

Single-feature linear regression is simple: all you need to do is find a function that best fits the training data, and it is easy to plot both the data and the learning curves. In practice, though, regression analysis usually involves multiple features, so in most cases we cannot visualize the multidimensional space in which the data would be plotted. Instead, we have to rely on the mathematical methods themselves. This means you should feel comfortable with linear algebra, in particular with matrices and vectors.

Where previously we had one feature (temperature), we now need to introduce several, so we need to extend the hypothesis to accept more features. From now on, instead of the output y, we are going to use the h(x) notation:

$$h_\theta(x) = \theta_0 + \theta_1 x_1 + \theta_2 x_2 + \dots + \theta_n x_n$$

As you can see, with more variables (features), we also end up with more parameters θ that have to be learned. Before we move on, let's find relevant data that we can use to build the machine learning algorithm.

The data set

Again, we are going to use a data set from college Cengage. This time we select the health data set, which has several variables. The data (X1, X2, X3, X4, X5) are by city:

- X1 = death rate per 1000 residents
- X2 = doctor availability per 100,000…
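To make the multi-feature hypothesis concrete, here is a minimal sketch in plain Python. It assumes the common convention of prepending x_0 = 1 to each feature vector, so that θ_0 acts as the intercept and the whole hypothesis becomes a single dot product θᵀx. The function names `hypothesis` and `predict_all` are illustrative only, and the numbers in the usage example are made up rather than taken from the health data set.

```python
from typing import List, Sequence


def hypothesis(theta: Sequence[float], x: Sequence[float]) -> float:
    """Compute h(x) = theta_0 + theta_1*x_1 + ... + theta_n*x_n.

    `x` holds the n feature values of one example (without the bias term);
    `theta` holds n + 1 parameters, where theta[0] is the intercept.
    """
    if len(theta) != len(x) + 1:
        raise ValueError("theta must have exactly one more entry than x")
    # Assumed convention: prepend x_0 = 1 so the intercept folds into
    # a single dot product theta^T x.
    x_with_bias = [1.0, *x]
    return sum(t * xi for t, xi in zip(theta, x_with_bias))


def predict_all(theta: Sequence[float], X: Sequence[Sequence[float]]) -> List[float]:
    """Apply the hypothesis to every row (one training example per row) of X."""
    return [hypothesis(theta, row) for row in X]


if __name__ == "__main__":
    # Toy parameters for two features: theta_0 = 1.0, theta_1 = 2.0, theta_2 = 0.5.
    theta = [1.0, 2.0, 0.5]
    X = [[3.0, 4.0], [0.0, 0.0]]
    print(predict_all(theta, X))  # [9.0, 1.0]
```

Each additional feature adds one more parameter to `theta`, which is exactly why the number of parameters to learn grows with the number of features. Treating the examples as rows of a matrix X is what later lets the same computation be written as a matrix-vector product.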